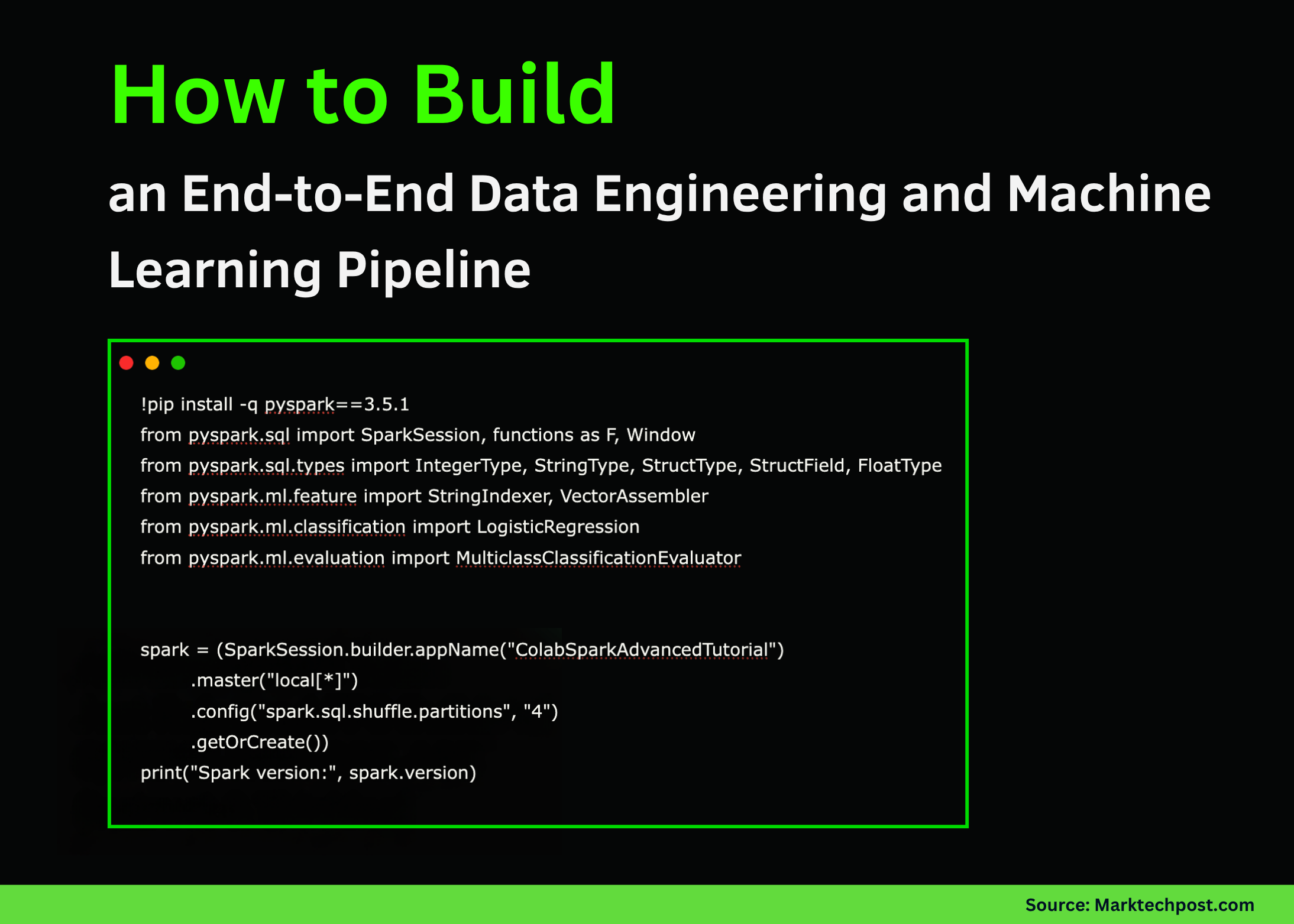

!pip set up -q pyspark==3.5.1

from pyspark.sql import SparkSession, capabilities as F, Window

from pyspark.sql.varieties import IntegerType, StringType, StructType, StructField, FloatType

from pyspark.ml.function import StringIndexer, VectorAssembler

from pyspark.ml.classification import LogisticRegression

from pyspark.ml.analysis import MulticlassClassificationEvaluator

spark = (SparkSession.builder.appName("ColabSparkAdvancedTutorial")

.grasp("native(*)")

.config("spark.sql.shuffle.partitions", "4")

.getOrCreate())

print("Spark model:", spark.model)

information = (

(1, "Alice", "IN", "2025-10-01", 56000.0, "premium"),

(2, "Bob", "US", "2025-10-03", 43000.0, "normal"),

(3, "Carlos", "IN", "2025-09-27", 72000.0, "premium"),

(4, "Diana", "UK", "2025-09-30", 39000.0, "normal"),

(5, "Esha", "IN", "2025-10-02", 85000.0, "premium"),

(6, "Farid", "AE", "2025-10-02", 31000.0, "primary"),

(7, "Gita", "IN", "2025-09-29", 46000.0, "normal"),

(8, "Hassan", "PK", "2025-10-01", 52000.0, "premium"),

)

schema = StructType((

StructField("id", IntegerType(), False),

StructField("title", StringType(), True),

StructField("nation", StringType(), True),

StructField("signup_date", StringType(), True),

StructField("revenue", FloatType(), True),

StructField("plan", StringType(), True),

))

df = spark.createDataFrame(information, schema)

df.present()